The Driving Force Behind the Digital Revolution

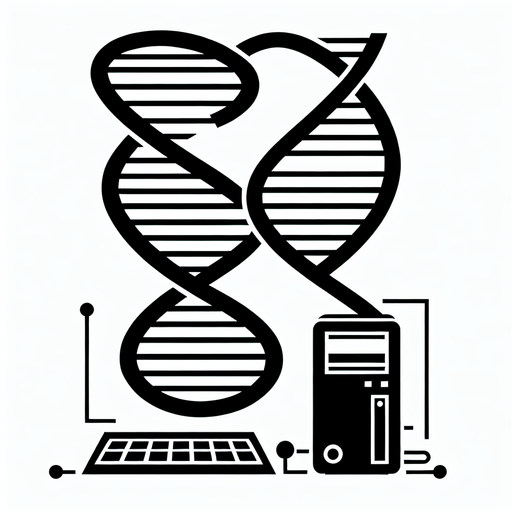

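In 1965, Gordon Moore, then director of research and development at Fairchild Semiconductor and later a co-founder of Intel, made a bold observation: the number of components on an integrated circuit had been doubling roughly every year, and he expected the trend to continue. In 1975 he revised the pace to a doubling approximately every two years, the figure most often quoted today. Sustained doubling means exponential growth in computing power, and this prediction, later dubbed Moore’s Law, became a guiding principle for the tech industry, driving innovations that shaped the modern world.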
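The doubling rule is easiest to grasp as arithmetic. If a count starts at N₀ and doubles once every T years, after t years it reaches N₀ · 2^(t/T). The short sketch below is purely illustrative: the function name `projected_count` and the starting value of 1,000 transistors are hypothetical, not figures from Moore's paper.

```python
def projected_count(initial: float, years: float, doubling_period: float = 2.0) -> float:
    """Project a count that doubles once every `doubling_period` years (hypothetical helper)."""
    if doubling_period <= 0:
        raise ValueError("doubling_period must be positive")
    # Exponential growth: one doubling per `doubling_period` years.
    return initial * 2 ** (years / doubling_period)


# Illustrative only: a hypothetical chip starting with 1,000 transistors,
# doubling every two years.
for years in (0, 10, 20):
    print(years, round(projected_count(1_000, years)))
```

With a two-year doubling period, the count grows 32-fold in a decade and about 1,000-fold in twenty years; with a one-year period (`projected_count(1, 10, 1.0)`), a single decade already yields a factor of 1,024. That gap is why the exact doubling period matters so much for long-range projections.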

The origins of Moore’s Law trace back to Moore’s article “Cramming More Components onto Integrated Circuits,” published in Electronics magazine on April 19, 1965. In response to a request from editor Lewis H. Young, Moore shared his vision for the future of electronics in a piece that would become foundational for the industry. His insights captured the potential of semiconductor technology, laying the groundwork for decades of advancement.

Moore’s Law wasn’t just about hardware; it came to symbolize the pace of technological progress. From the bulky computers of the 1960s to the sleek smartphones of today, the exponential growth in computing capability per chip enabled advances in artificial intelligence, medical imaging, gaming, and beyond. It made devices faster, cheaper, and smaller, transforming how we live and work.

Though the pace of transistor scaling has slowed in recent years, Moore’s Law continues to inspire innovation, with efforts such as new chip architectures and quantum computing aiming to extend computing performance beyond what shrinking transistors alone can deliver. More than just a prediction, it shows how a simple rule of thumb can define an entire industry, shaping not just the technology itself but the aspirations and strategies of those who create it.
